Executive Summary

Macrosoft’s new product, Web MineR, is a state-of-the-art web scrapping cloud application. It has over 25 best-in-class capabilities, and is highly scalable, efficient, secure and configurable. The list of major features continues to grow, as shown in the latest Roadmap posted on Macrosoft’s web site. In addition, several recent papers on Macrosoft’s web site discuss in detail these 25 major capabilities, and how they compare to other products in the marketplace.

This paper starts a dialogue on some of the newest functionality to Web MinerR which are centered in the area of NLP, specifically text summarization and topic modeling. These are clearly important features to have in a web scaping technology. We expect these capabilities to be fully available by second quarter 2021. The purpose of this paper is to describe the research into NLP we are now doing to make sure these capabilities perform well when delivered in Web MineR.

Text summarization and topic modeling are two of the most prominent use cases in the field of Natural Language Processing (NLP). Text summarization allows one to understand the basic idea of a block of text without having to manually read and summarize the document. Topic modeling on the other hand provides the ability to extract main topics from a block of text and thus get the key ideas of the paper, again without manually reading it.

That is at least the concept behind these two important NLP capabilities. Certainly, the technologies are getting better with time, as AI/NLP continues to move forward at a tremendous pace.

How well they actually work today for text extracted from a wide range of web sites (in many different languages) is still an open issue in our minds. For sure it is critical to know how to best configure and use the NLP algorithms we select to use in these two areas in order to get the best possible out of them. Hence, that is the goal of our current research in this area and below we report on some of our findings to date.

We are doing this testing and research in order to ensure they are reliable when we incorporate them into our web scrapping system. Web MineR is built to scrape public information off web sites at ultra-high throughput capacity. The addition of text summarization and topic modeling to the tail end of the Web MineR process would be an obvious major benefit to our user community.

This paper contains a detailed background on both these subjects – text summarization and topic modeling – along with some results of trials and case studies of different off-the-shelf NLP systems and algorithms we have tried out so far. We expect to continue this testing and research through the end of the year.

The tests we show here are on healthcare-related documents. In addition to English, we show how these capabilities can be applied to Mandarin language documents as well. Overall, our research and testing results show reasonably good performance using NLP methods to extract summaries and topics from documents. We feel strongly this will add useful new capabilities to Web MineR. Stay tuned!

Text Summarization

Text summarization is the task of creating a concise and accurate summary that represents the most critical information and has the basic meaning of a longer document’s content. It can reduce reading time, accelerate the process of researching for information, and increase dramatically the amount of information t found within an allotted time frame. Text summarization can be used for various purposes such as medical cases, financial research, and social media marketing.

Text summarization can broadly be divided into two categories: Extractive summarization and abstractive summarization.

- Extractive summarization is to identify and extract essential sentences from the original text and stack them together as a summary.

- Abstractive summarization is to use advanced NLP techniques to generate a new summary. The sentences that are generated through abstractive summarization may not even appear in the original text.

Resources/libraries that are commonly used for text summarization include: Natural Language Toolkit (NLTK) and Spacy, and pre-trained models like Bert and T5. NLTK and Spacy are open source libraries used for NLP on Python. Bidirectional Encoder Representations from Transformers (BERT) is a technique for natural language processing pre-training developed by Google. BERT is pre-trained on a large corpus of un-labelled text, including the entire Wikipedia(2,500 million words) and Book Corpus (800 million words). You can fine-tune it further by adding just a couple of additional output layers to create state-of-the-art models for your specific text mining application. T5 is an encoder-decoder model and converts all NLP problems into a text-to-text format, and it is trained on a mixture of unlabeled text (C4 collection of English web text).

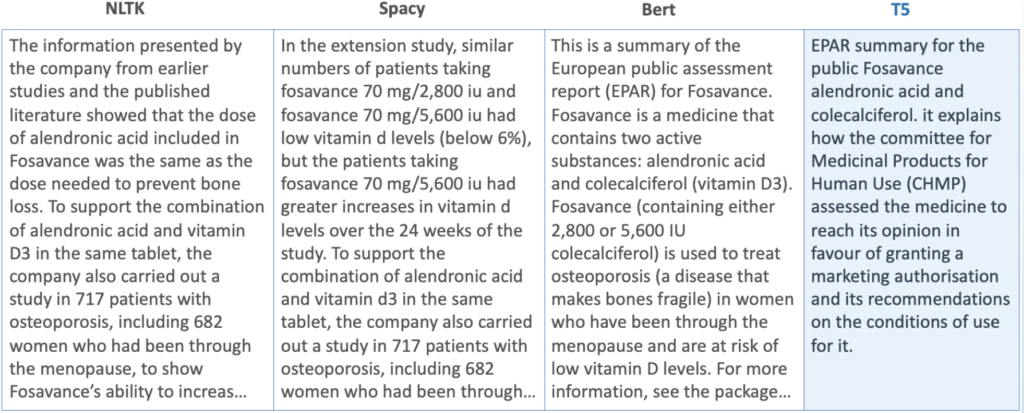

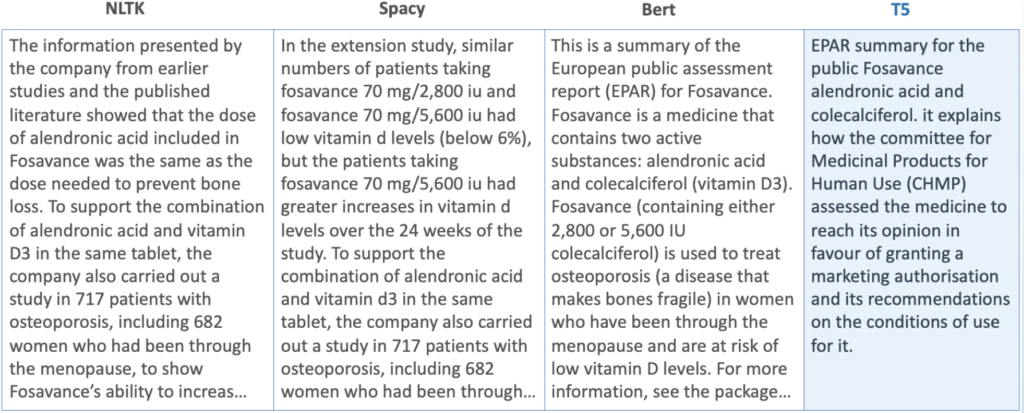

In our test use cases, we used NLTK, Spacy, and BERT for extractive summarization, and we used T5 for abstractive summarization. We applied each of the 4 tools to implement text summarization.

Here is an example that shows the summaries that we generated using the different tools. We first load a text file (PDF format) and then applied the four text summarization methods.

Text summarization by using NLTK library first uses Glove to extract words embedding and then uses cosine similarity to compute the similarity between sentences and apply the PageRank algorithm to get the score for each sentence. Based on the sentence scores it put together the top-n sentences as a summary. Spacy library will tokenize the text, extract keywords, and then calculate the score of each sentence based on keyword appearance. Text summarization using pre-trained model BERT will first embed the sentences and then run the clustering algorithm to finally find the sentences closest to the centroids and use those sentences as the summary. T5 converts all NLP problems into a text-to-text format, and the summarization is treated as a text-to-text problem. The model we used is called T5ForConditionalGeneration, and it loads the T5 pretrained model to extract a summary.

The document we used to implement text summarization is a summary of the European public assessment report (EPAR) for the drug Fosavance. It explains how the Committee for Medicinal Products for Human Use (CHMP) assessed the medicine to reach its opinion in favor of granting a marketing authorization and its recommendations on the conditions of use for Fosavance. After analyzing the 4 summaries, the T5 model gives us the best summary and in particular it only presents the main information contained in the original document and does not go astray.

Topic Modeling

Topic modeling allows us to find a group of topics from a large collection of textual information that best represents hidden topical patterns across the collection. It can understand, organize, and summarize large collections of information for different areas like recommender systems, bio-informatics, and topic tracking systems.

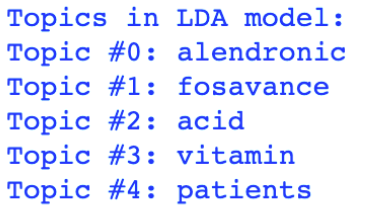

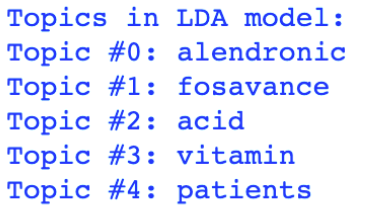

There are two main approaches used for topic modeling: Latent Dirichlet Allocation (LDA) and Non-Negative Matrix factorization (NMF). LDA is a probabilistic model, and NMF is a linear-algebraic model. We used the LDA approach to implement topic modeling for the Fosavance document mentioned above and got the following topics:

Fosavance is a medicine that contains two substances: alendronic acid and vitamin D3. It is used for osteoporosis in women who have been through menopause and are at the risk of low vitamin D levels. The topics LDA generated reflects the key information of the document.

Mandarin Text Summarization & Topic Modeling

Multilingual text summarization is a hot topic in the NLP domain. It can provide information to people who are not able to read or understand a language so that people can take advantage of more information from data in different languages.

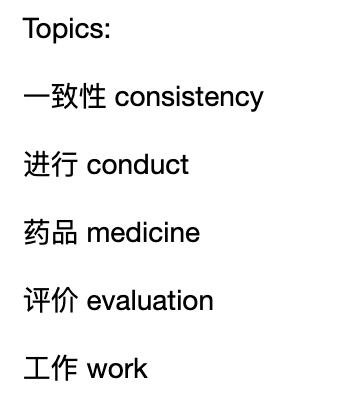

We use two approaches to implement multilingual text summarization. One is to translate multilingual documents to the desired language, then do the same text summarization as discussed above. The other approach is using NLP techniques for multilingual documents directly. Here we use Chinese (Mandarin) to English as an example. The Mandarin document we use here is a government announcement about how to proceed with work for quality- and efficacy- consistency evaluation of a generic drug.

To process Mandarin text summarization, we found some readily available packages. TextRank-for-zh is a python implementation of the TextRank algorithm for the Mandarin language. Nlg-yongzhuo is a toolkit for text summarization, corpus data, extractive text summary of Lead3, keyword, TextRank, text teaser, word significance, LDA, LSI, NMF. Tika is used for reading Mandarin documents. Jieba is used for Mandarin word segmentation.

Here are the summary and the critical topics for the document. It has been generated by using the TextRank algorithm. The summary gets the essential sentences for the document. It is about how to evaluate the consistency of the generic drug and how to choose a test reagent.

Mandarin Translation and Text Summarization

The other approach for Mandarin text summarization first translates the Mandarin document to English by using Google translate. Below is the top of the translated document.

After we get the English text, we apply the same techniques for the English text summarization. Here is the summary generated by BERT:

This method can apply to various languages as long as we can translate the data to English language. Implementing text summarization methods after translation can greatly increase the access to information.

Summary

This paper is a ‘first-look’ at some research we have underway to add NLP capabilities to our web scraping product, Web MineR. With the help of both text summarization and topic modeling, we can quickly filter the essential information from a large amount of data extracted from a web site or from a collection of web sites.

Please keep visiting our web site for updates on continuing research we have going in this area. We believe strongly that the addition of these NLP capabilities, specifically text summarization and topic modeling, will add tremendous new capabilities to our Web MineR.

Give us a call to discuss further what your web scraping needs might be. We would be happy to perform a ‘’test drive’ to compare outputs from our product with those from your existing product or service!

By G.N. Shah, Taha Sharafat, Wenjia Zheng, Ronald Mueller | December 14th, 2020 | Process Automation

Recent Blogs

Advantages of Technology and IT Companies Partnering with Staffing Firms Offering Visa Sponsorship

Read Blog

CCM in the Cloud: The Advantages of Cloud-Based Customer Communication Management

Read Blog

The Rise of Intelligent Automation: A Roadmap for Success

Read Blog

Home

Home Services

Services